0. Local debugging

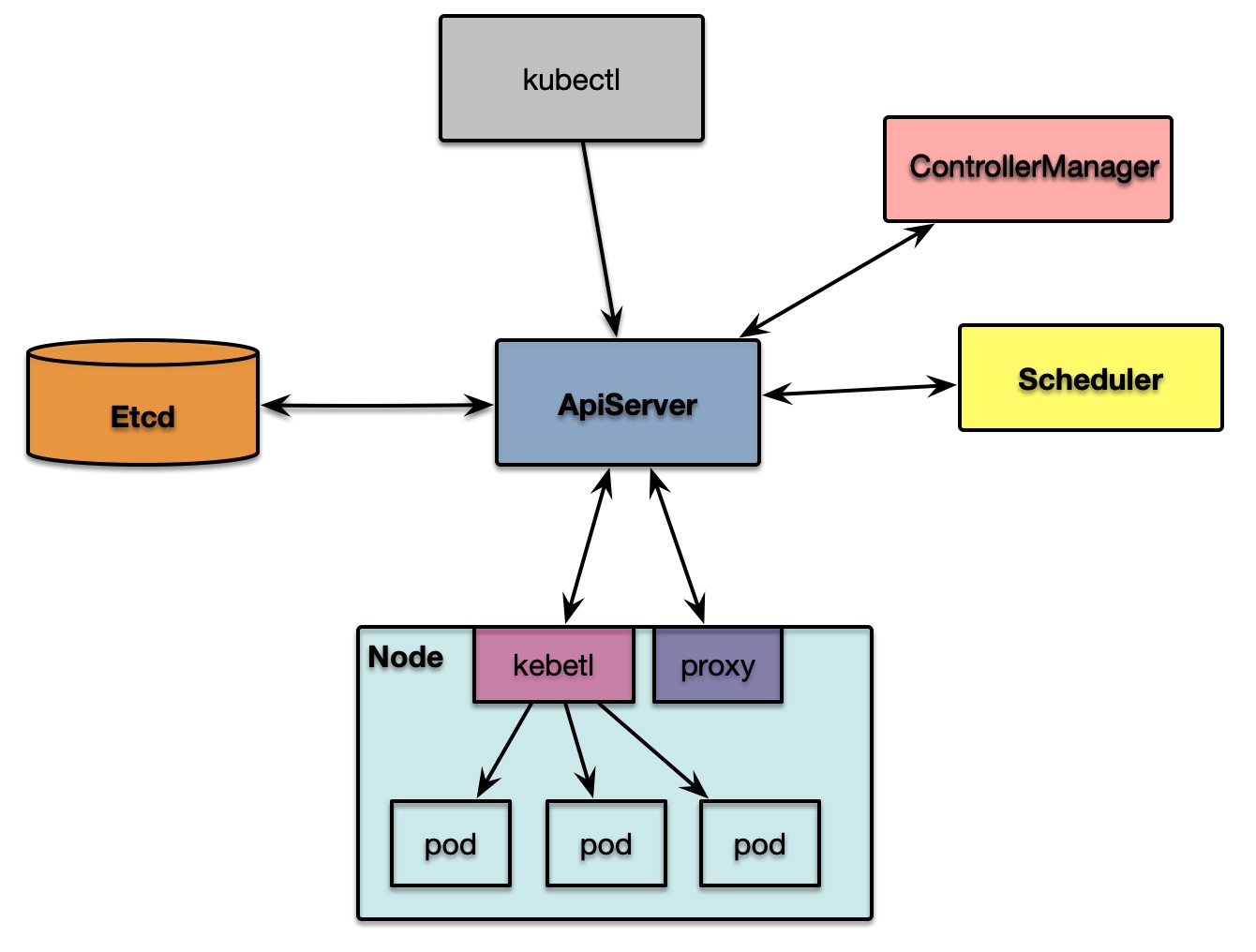

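Kubernetes (k8s) components talk to each other over encrypted channels, so starting each component with only a minimal set of flags leaves them unable to communicate. This section describes how to configure the components so that a k8s cluster can be debugged locally.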

0.1 Running and debugging locally

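The k8s repository includes a script, hack/local-up-cluster.sh, that brings up a local k8s cluster. The certificates and other files it generates can also be reused when debugging from an IDE such as IDEA. The script is modified here in one way: each command that would launch a component is replaced with an echo that prints the command and its arguments. See the attachment[^1].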
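The modification described above can be sketched as a small wrapper. This is a minimal illustration, not the actual change to hack/local-up-cluster.sh: the `run` helper and the `DRY_RUN` variable are hypothetical names, and the kube-apiserver flags shown are only a small illustrative subset of what the real script passes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of hack/local-up-cluster.sh):
# print a command line instead of executing it, unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Print the command and all of its arguments on one line,
    # so they can be copied into an IDE run configuration.
    printf '%s\n' "$*"
  else
    # Execute the command unchanged.
    "$@"
  fi
}

# Illustrative flags only; the real script passes many more.
run kube-apiserver --secure-port=6443 --cert-dir=/var/run/kubernetes
```

With `DRY_RUN` left at its default, the script still generates its certificates but only prints each component's command line, which can then be pasted into the IDE's program arguments.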

0.2 Remote debugging

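Another approach is to run a k8s cluster on a separate machine and debug it remotely with dlv. For details, see the article 搭建k8s的开发调试环境 ("Setting up a k8s development and debugging environment").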
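As a rough sketch of what remote debugging with dlv usually involves, the snippet below only prints the two commands: one to start a headless dlv server on the remote machine, and one to attach from the local machine. The host address, port, binary path, function names, and the component flag are all placeholder assumptions, not values from the referenced article. The component binary is assumed to have been built without compiler optimizations so that breakpoints and variables behave as expected.

```shell
#!/usr/bin/env bash
# Placeholder values (assumptions); adjust for your environment.
REMOTE_HOST="${REMOTE_HOST:-192.0.2.10}"
DLV_PORT="${DLV_PORT:-2345}"

# Print the command to run on the remote machine: start the binary
# under a headless dlv server that waits for a client to connect.
dlv_server_cmd() {
  binary="$1"
  shift
  printf 'dlv exec %s --headless --listen=:%s --api-version=2 --accept-multiclient -- %s\n' \
    "$binary" "$DLV_PORT" "$*"
}

# Print the command to run on the local machine: attach to that server.
dlv_client_cmd() {
  printf 'dlv connect %s:%s\n' "$REMOTE_HOST" "$DLV_PORT"
}

dlv_server_cmd ./kube-apiserver --secure-port=6443
dlv_client_cmd
```

Everything after the `--` separator is passed to the debugged binary itself, so the component flags printed in the local setup can be reused there.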

Attachments

[^1]: Generating the keys locally and printing the component command arguments

#!/usr/bin/env bash